John Fairbairn wrote:

First a comment. I have mentioned several times before that pros usually don't count the way we do. They can look at the board and see inefficiencies and simply count those (there aren't so many in pro games). Generally the side with the most inefficiencies is behind, but obviously some inefficiencies are worse than others and pros seem to have enough skill to assess how much each one is worth (I expect, though, some are significantly more skilled at this than others). Because they have this feel for the game, they stress efficiency of plays an awful lot.

Well, I rarely count points in games, and usually use the "sum of how bad I think my mistakes were" vs "sum of how bad I think opponent's mistakes were" as the basis for judging whether I'm leading. I did actually count a few times in my recent title match game. In the middlegame my count suggested that defending my 3-3 could be OK, but there was just too much play left in other areas of the board for me to be confident about a conservative gote move; then things got out of hand and I never got the chance. In the endgame I counted that it was super close, so I did some reading instead of being lazy and playing (just!) unnecessary defence. I have noticed that in his commentaries Myungwan Kim counts territory a lot (though he often takes the "white needs to make at least 15 in this hard-to-count area to be even" approach), whereas Michael Redmond rarely does.
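The "sum of mistakes" bookkeeping above is done in the head, but for anyone who likes to see it spelled out, here is a toy Python tally. Nothing here comes from a real tool: the `(player, points_lost)` input format and the `who_leads` name are made up for illustration, and it assumes the game started even (fair komi), so whoever has given away fewer points is ahead.

```python
from collections import defaultdict


def who_leads(mistakes):
    """Toy version of the 'sum of mistakes' judgement.

    mistakes: iterable of (player, points_lost) pairs, player being "B" or "W".
    The point values are the reviewer's own rough guesses (hypothetical format).
    Assumes the game started even (fair komi), so the side that has given
    away fewer points is the one leading.
    """
    lost = defaultdict(float)
    for player, points in mistakes:
        lost[player] += points
    # Positive margin: White has thrown away more than Black, so Black leads.
    margin = lost["W"] - lost["B"]
    if margin > 0:
        return "B", margin
    if margin < 0:
        return "W", -margin
    return "even", 0.0


# Black gave away about 4 points and White about 5, so Black leads by about 1.
print(who_leads([("B", 3), ("W", 5), ("B", 1)]))
```

The hard part, of course, is the guessing of each mistake's size, which is exactly the skill the pros are said to have.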

John Fairbairn wrote:

I've understood this, and have even been able on occasion to simulate their behaviour, but mostly I have treated it as a little bit of a party trick by them. However, my first forays with Lizzie/LZ have astonished me because it spots inefficiencies very early in the game and marks them down heavily. For example, there was a joseki where a connection was needed and two were available. The pro played the solid connection but LZ preferred a hanging connection. It hardly seemed to matter because there was no immediate danger, no shortage of liberties, nothing special at all - purely a case of long-term efficiency. But LZ adjudged the pro's solid move a whopping 6 percentage points worse. That pattern seems to emerge elsewhere and so I now understand even better why pros care about efficiency of plays, but at the same time it seems they have not entirely mastered all the elements of good shape.

I think far too much of pros' judgement of joseki (or at least what I've gleaned of it) is based on reference/tewari/comparison to other results they think (possibly mistakenly) are OK, where such comparisons can accumulate asymmetric errors, instead of on trying to make more absolute judgements based on where the stones end up. "Well, we played these moves which are probably okay and it started even, so it ended even" isn't great. Robert Jasiek's territory/influence stone-counting approach is a nice idea in that direction, but just not good enough to be useful. Basically you need a massive function with gazillions of parameters finely tuned to judge a multitude of facets of a position. Hang on a minute, I just described a neural network!

6% is quite a big mistake for a pro, but not that unusual. If you want to see lots of big % drops to shatter the illusion pros know what they are doing then I suggest reviewing pro games with Elf

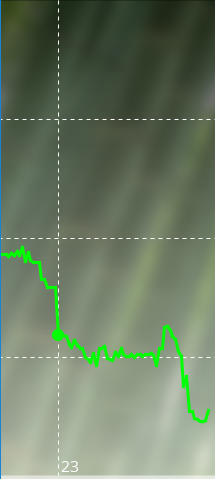

. I was recently reviewing the 5th Kisei title match of Shuko vs Otake with Go world commentary and half the opening moves were 8-12% point mistakes according to Elf (lots of splitting or extending on side moves, even before bots that had gone out of fashion as not active/efficient enough). LZ #157 was much more tame, just rating them -1 to -3%. Here's a graph from LZ (the horizontal dashed lines are at 25/50/75%).

Attachment: Kisei5g1LZ157.PNG

You can see the little zigzags as they play side moves in the opening. That big 10% drop on move 23 received no comment. Shuko did criticise Otake's move a few moves later (LZ agrees it was bad, -3%), saying "This move surprised me. I think it must have been a slip. Black would have had quite a good game if he had connected at 34 instead". LZ thinks the damage was already done and white would still be at 70%, though that is with 7.5 komi, not 5.5. By move 35 "... makes it clear how badly Black has done here. The general feeling was that he [Otake] had suffered a loss equivalent to the komi. Some professionals commented that the game had already been decided". LZ, with its 7.5 komi, says white was at 76%. Note that LZ didn't anticipate Otake's good move 29 beforehand, so its judgements before that move are less trustworthy, but once it reaches that position it does find the move after about 30,000 playouts (so people without a GPU will probably never find it). You can compare this graph with the one from my title match review to see the difference in the size of mistakes between top pros of the 80s and two EGF 4ds. (This assumes LZ is stronger than the players, which is probably true, though it might not be in some complicated fights or ladders where LZ can have delusions; these tend to disappear with sufficient playouts.)
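For anyone who wants to pick out the big drops in a graph like this programmatically rather than by eye, here is a minimal Python sketch. It assumes you already have White's win probability after every move as a plain list (exporting that from Lizzie or another GUI isn't covered here), that it's an even game with Black playing the odd-numbered moves, and the `winrate_drops` name and 5% threshold are arbitrary choices for illustration.

```python
def winrate_drops(white_wr, threshold=0.05):
    """Return (move_number, player, drop) for moves that lost >= threshold.

    white_wr[i] is White's win probability (0..1) after move i, with
    white_wr[0] the evaluation before the first move. Assumes an even game
    with Black playing the odd-numbered moves (hypothetical input format).
    """
    flagged = []
    for move in range(1, len(white_wr)):
        player = "B" if move % 2 == 1 else "W"
        delta_white = white_wr[move] - white_wr[move - 1]
        # The mover's loss is how far their own win probability fell:
        # White's rise is Black's loss, White's fall is White's loss.
        drop = -delta_white if player == "W" else delta_white
        if drop >= threshold:
            flagged.append((move, player, round(drop, 3)))
    return flagged


# Only Black's third move (a 12-point jump for White) crosses the threshold.
print(winrate_drops([0.5, 0.52, 0.50, 0.62, 0.60]))
```

This measures drops from the engine's point of view only, so the caveats above about playouts, delusions and move 29 apply just as much to the numbers it spits out.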

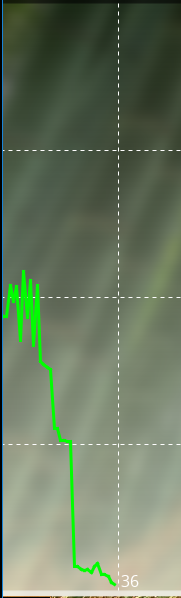

Here's the graph from Elf v1. You can see the much bigger swings from the more opinionated Elf as they play side moves instead of corner moves. What LZ 157 rated as a 10% drop, to 70% for white, Elf rates as a 21% drop, to 95% for white. White is at 99% by move 35. Elf thinks Shuko did make some mistakes in the middle game which took him down to only 80%, but that didn't last long.
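One crude way to put a number on "bigger swings" when comparing two engines over the same game is the average absolute move-to-move change in win probability. The sketch below is a hypothetical helper, assuming the same list-of-White-win-probabilities input as before; it says nothing about which engine is right, only which is more opinionated.

```python
def mean_swing(white_wr):
    """Average absolute change in White's win probability between moves.

    white_wr: list of White's win probabilities (0..1), one per position
    (hypothetical input format). Larger values mean a more 'opinionated'
    evaluation of the same game.
    """
    if len(white_wr) < 2:
        raise ValueError("need at least two evaluations")
    changes = [abs(after - before) for before, after in zip(white_wr, white_wr[1:])]
    return sum(changes) / len(changes)


# Made-up numbers: an 'Elf-like' series with big swings vs an 'LZ-like' tame one.
elf_like = [0.5, 0.7, 0.3]
lz_like = [0.5, 0.55, 0.45]
print(mean_swing(elf_like) > mean_swing(lz_like))
```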

Attachment: Kisei5g1Elfv1.PNG

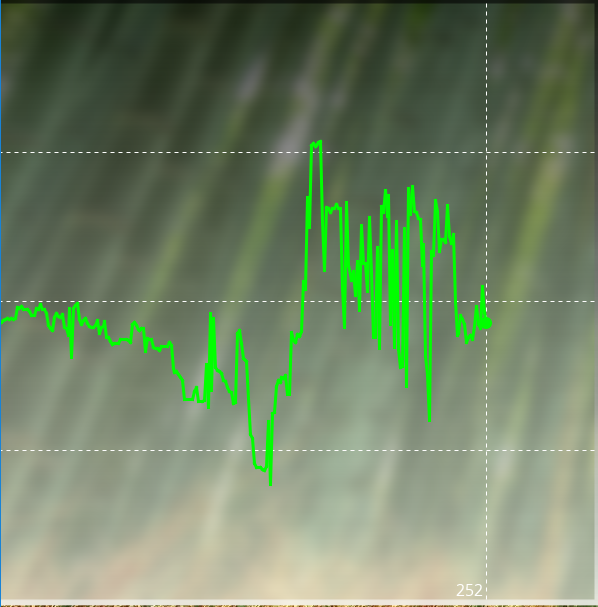

And another LZ157 graph for comparison, a half-pointer from Park Junghwan vs Mi Yuting a few days ago (at 7.5 komi). The deviations are generally smaller than in Shuko vs Otake, so LZ supports the belief that today's top pros are stronger than those of the past (though in the opening they may be playing bot joseki, which LZ will approve of unless they play them in unsuitable situations, which actually happens a lot; but maybe that's not a caveat if such joseki really are better).

Btw, white's 40 was the biggest mistake of the opening at -9%; can you find the better move? It's totally logical, a beautiful tesuji that exploits black's timing mistake. LZ is good at this sort of sharpness in fighting. That big spike at move 110 is for the f11/g11 exchange, which I couldn't understand and neither can LZ, which says it was bad. I'd really like to know what the players were thinking: did they see something LZ didn't, which LZ agrees is important once shown (well done them)? Did LZ not see it, but when shown still think it's bad and stick to its opinion? Or did LZ see it and dismiss it as bad? I also think some of the big zigzags later are due to foibles in LZ's analysis. For example, it wants to play the profitable sente exchanges from t13 for white quite early, so when white does something else it sometimes doesn't see (at 10k playouts) that white will be able to play them later too, and rates the other move as worse when really it's just a different order of the same chunks. Since it's a close game, big swings could just be 1-point mistakes that change the winner. It also wastes playouts throughout the game wanting to play black's a13 sente move, which humans can save for a ko threat with little thought and then stop wasting brainpower on. And generally I think the fighting might be too complicated for LZ on moderate playouts, so I wouldn't confidently say the players must be making big mistakes here. With more time I could try to tease out the truth, but really a stronger player than myself is needed for a reliable interpretation (Elf can help).
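The point that in a close game a 1-point mistake can look like a huge swing can be illustrated with a toy model. The sketch below assumes win probability is a logistic function of the score lead with an arbitrary scale `k`; that formula is purely an assumption for illustration, since LZ and Elf estimate win probability directly from their value networks and don't use it.

```python
import math


def winrate_from_lead(lead, k=0.5):
    """Toy model: map a score lead (in points) to a win probability.

    The logistic shape and the scale k are assumptions for illustration only;
    LZ and Elf do not compute win probability this way.
    """
    return 1.0 / (1.0 + math.exp(-k * lead))


def swing_for_one_point(lead, k=0.5):
    """Win-probability change when the lead shrinks by one point."""
    return winrate_from_lead(lead, k) - winrate_from_lead(lead - 1, k)


# Around a half-point lead, one point flips roughly 12% of win probability;
# with a 10-point lead the same point barely registers.
print(f"close game: {swing_for_one_point(0.5):.3f}")
print(f"clear lead: {swing_for_one_point(10):.3f}")
```

Under any S-shaped curve like this the slope is steepest around an even score, which is why a half-pointer produces big zigzags even when the actual mistakes are small.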

Attachment: ParkvsMiLZ157.PNG

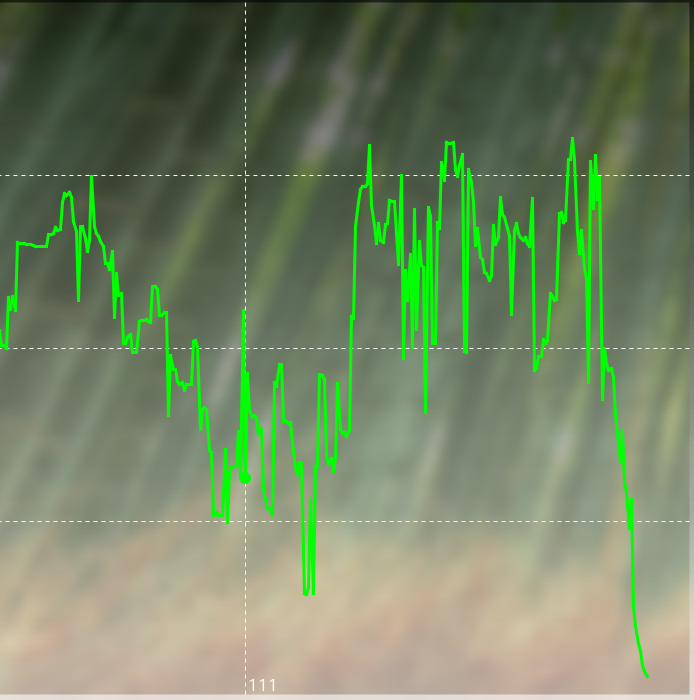

Elf v1 on only 1k playouts over the whole of Park vs Mi. It's noticeable that in the early game it prefers black and generally sees a lot more going on. For starters, it thinks that after parallel 4-4s, white answering a knight's-move approach with a knight's move is a 7% mistake (Elf v0 thought it was fine). Has Elf v1 discovered how to make better use of black's sente advantage? It also thinks Mi only won very late, with Park connecting the ko, though I don't know if it can correctly read/intuit the deciding ko fight with so few playouts. Update: playing out the ko fight as Elf wants shows the win gradually becoming white's, so it seems Elf was mistaken to think black was winning before.

Attachment: ParkvsMiElf1.PNG